AI Teaching Tips

Federal and state guidance emphasizes that integrating AI into K-12 education must be guided by strong principles that maximize potential benefits while addressing risks related to privacy, security, and fairness.

This page offers teachers practical, high-impact “teaching tips” for aligning instruction with confidence, fostering AI literacy, and effectively integrating AI tools into K-12 classrooms and the school community. The goal of these tips is to help teachers move beyond reacting to AI and feel prepared to teach AI literacy skills and concepts.

We want your feedback!

Classroom expertise matters. The Florida K-12 AI Education Task Force is committed to developing practical, evidence-based guidance for educators across the state. We invite you to review and provide direct feedback on the draft “AI Teaching Tips” working document via Google Docs. Your comments are essential as we promote flexible, practical, real-time resources and work to ensure they align with the needs of Florida’s teachers and students.

Creating a Generative AI Classroom Policy

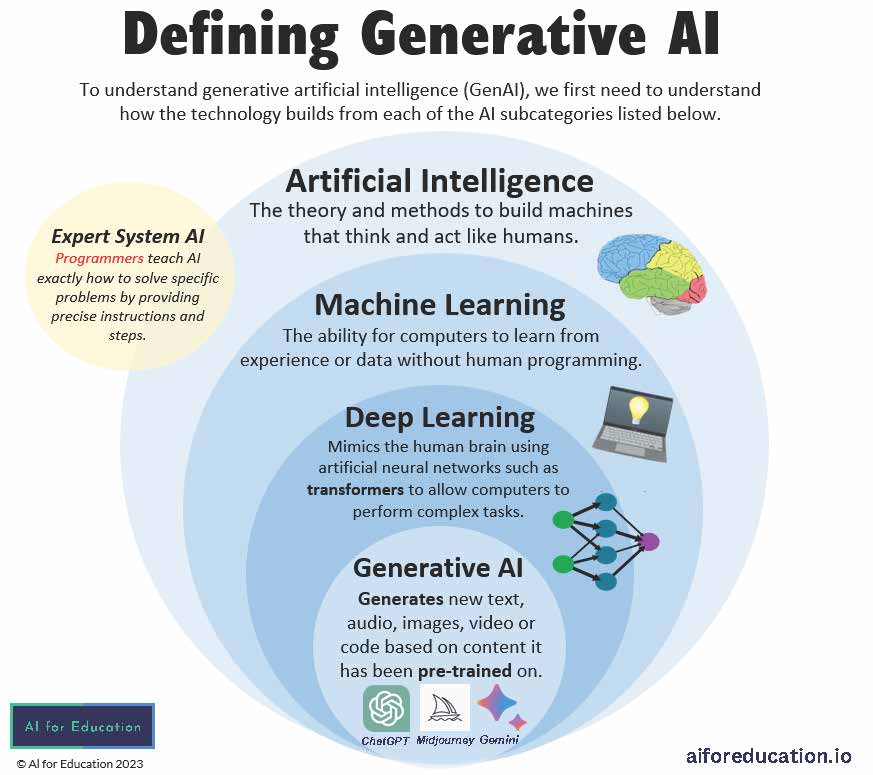

A Generative AI classroom policy is a set of clear, actionable guidelines, established by the teacher and students, that define how students may (or may not) use Generative AI tools (such as ChatGPT or Gemini) for assignments, coursework, and learning activities.

Begin with the district’s Acceptable Use Policy

Purpose: to ensure classroom AI use complies with district policy and applicable law.

Example: “Under our district’s Acceptable Use Policy (AUP), all AI tools must comply with FERPA, COPPA, and CIPA. Let’s discuss what this means for you as students.”

Learn More: TeachAI Policy Resources

Co-create with students

Purpose: to promote student agency and shared responsibility.

Example: “Let’s work together to create our class agreement about when and how we’ll use AI tools!”

Learn More: Building An AI Classroom Policy With Your Students, Not For Them

Define responsible AI use by starting with transparency

Purpose: to clarify when AI is appropriate as a learning tool and help students understand the capabilities and limitations of AI tools.

Examples: “You may use AI to brainstorm ideas or check your understanding, but all final work must be your own.” To model transparency, describe your own use: “I use AI to [what you do] because [why you use it], and I always check for [___].”

Learn More: How to Use AI Responsibly EVERY Time & AI Teaching Strategies: Having Conversations with Students

Address data privacy early

Purpose: to protect student information and build responsible habits.

Example: “Never share personal information with AI tools – no names, addresses, or private details about yourself or others.”

Learn More: The Educator’s Guide to Student Data Privacy

Build in reflection time

Purpose: to encourage metacognition about AI use.

Example: “After using AI, we’ll reflect: How did it help? What did you learn? What could you do differently next time?”

Learn More: A Decision Tree to Guide Student AI Use

Teaching Students About AI Bias

When teaching students about bias in data, consider the following definition: AI bias occurs when an artificial intelligence system (such as a chatbot, image generator, or grading tool) produces skewed results because it was trained on incomplete or misleading datasets.

Understand how to detect deepfakes

Purpose: to help students identify deepfakes and weigh their positive and negative consequences.

Example: “How can we spot a deepfake by examining details such as hair, eyes, side profile, and emotional expressions?”

Learn More: Everyday AI – NSF, Unit 3: Society + AI

Test AI systems together

Purpose: to reveal bias through hands-on exploration.

Example: “Let’s all do an image search for ‘doctor.’ What do you notice about who is shown?”

Learn More: AI Literacy Learning Scenarios for Every Subject

Discuss data sources

Purpose: to help students understand bias origins.

Example: “If an AI is trained mostly on data from one group, what perspectives might be missing?”

Learn More: 3 types of bias in AI | Machine learning

Connect to students’ lived experiences

Purpose: to increase relevance and engagement.

Example: “Have you noticed recommendations on social media? Let’s discuss how bias in those algorithms affects what you see.”

Learn More: How AI Bias Impacts Our Lives | Common Sense Education

Teach auditing skills

Purpose: to empower students as critical consumers.

Example: “Let’s create a checklist to evaluate: Is this AI fair? Who might it harm? What voices are missing?”

Learn More: How do I fact-check AI search results? | Stanford CRAFT

Communicating About AI with Families

As new technologies continue to emerge, engaging parents, guardians, and caregivers is essential to building a strong partnership between school and home. Families need AI literacy to support their children’s success, safety, and ethical development in an AI-powered world.

Educate families

Purpose: to build understanding and partnership.

Example: “Host an AI literacy night where families experience AI tools and learn about classroom use.”

Learn More: Parents’ Night Curriculum Presentation — AI for Education

Share your school & district policy

Purpose: to promote transparency and trust.

Example: “Here’s how we’re using AI in class, why it matters, and how you can support learning at home.”

Address concerns openly

Purpose: to validate worries and provide reassurance.

Example: “Many parents share your concerns about AI. Here’s how we’re ensuring responsible, beneficial use…”

Provide resources for home discussions

Purpose: to extend learning beyond school.

Example: “Share family-friendly AI literacy resources so dinner conversations can continue the learning!”

Learn More: 5 Tips for Talking to Your Kids About AI

Invite parents to a student showcase

Purpose: to celebrate students and showcase positive AI integration.

Example: “Look at this amazing project our students created using an AI tool!”

Evaluating AI Tools

Educators are on the front lines of AI implementation. Student privacy and data protection are paramount when considering how Generative AI is used in K-12 education. The National Education Association (NEA) provides an excellent resource for vetting AI tools that helps teachers manage potential risks and evaluate pedagogical efficacy.

Check privacy policies

Purpose: to protect student data.

Example: “Does this tool comply with FERPA and COPPA? What data is collected and how is it used?”

Learn More: AI Privacy and Safety Checks

Assess for bias and fairness

Purpose: to ensure fair and positive outcomes for all students.

Example: “Has this AI been tested with a wide range of populations?”

Learn More: AI Initiatives

Test before adopting

Purpose: to verify educational value.

Example: “Try it yourself first. Does the tool improve learning outcomes? Are there opportunities for students to create rather than consume?”

Learn More: Evaluating AI tools — aiEDU

Verify accuracy

Purpose: to ensure reliable information.

Example: “Check AI-generated content for factual errors, especially in your subject area. Are the results trustworthy?”

Consider sustainability

Purpose: to plan for long-term implementation.

Example: “What does the tool cost? Does it require an ongoing subscription? What are the district’s purchasing requirements?”